Environment infrastructure design

This chapter is one of a series that make up the Omnissa Workspace ONE and Horizon Reference Architecture, a framework that provides guidance on the architecture, design considerations, and deployment of Omnissa Workspace ONE and Omnissa Horizon solutions. This chapter provides information about the design of the supporting environmental infrastructure.

Introduction

Several environment resources are required to support an Omnissa Workspace ONE and Omnissa Horizon deployment. In most cases these will already exist. It is important to ensure that minimum version requirements are met and that any specific configuration for Workspace ONE and Horizon is followed. For any supporting infrastructure component that Workspace ONE or Horizon depends on, that component must be designed to be scalable and highly available. Some key items are especially important when the environment is used for a multi-site deployment.

Environment design

A Workspace ONE and Horizon deployment typically depends on several environment resources, including Active Directory, DNS, DHCP, security certificates, databases, and load balancers. Any external component that Workspace ONE or Horizon depends on must be designed to be scalable and highly available, as described in this section.

The following components should not be treated as an exhaustive list.

Active Directory

Horizon 8 requires an Active Directory domain structure for user authentication and management. Standard best practices for an Active Directory deployment must be followed to ensure that it is highly available.

Because cross-site traffic should be avoided wherever possible, configure AD sites and services so that each subnet used for desktops and services is associated with the correct site. This guarantees that lookup requests, DNS name resolution, and general use of AD are kept within a site where possible. This is especially important in terms of Microsoft Distributed File System Namespace (DFS-N) to control which specific file server users get referred to.
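The effect of subnet-to-site association can be illustrated with a short Python sketch. It assumes that a client is matched to the most specific subnet that contains its address, which is how Active Directory subnet objects are generally evaluated. The subnet ranges, the site names, and the `site_for` helper are hypothetical and exist only for illustration.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical subnet-to-site assignments; replace with your own ranges.
SITE_SUBNETS = {
    "10.10.0.0/16": "Site-A",
    "10.20.0.0/16": "Site-B",
    "10.10.64.0/20": "Site-B",  # desktop range carved out of the Site-A block
}

def site_for(address: str, subnets: Dict[str, str] = SITE_SUBNETS) -> Optional[str]:
    """Return the site of the most specific subnet that contains the address."""
    ip = ipaddress.ip_address(address)
    matches = [
        (ipaddress.ip_network(cidr), site)
        for cidr, site in subnets.items()
        if ip in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no subnet covers this client, so no site can be chosen
    # The longest prefix (most specific subnet) wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]
```

A desktop at 10.10.70.5 resolves to Site-B in this sketch because the /20 entry is more specific than the /16 entry. An address that returns `None` represents a subnet that was never associated with a site, which is the situation to avoid.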

Table 1: Implementation strategy for Active Directory Domain Controllers

| Decision | Active Directory domain controllers will run in each location. Core data center locations may have multiple domain controllers. |

| Justification | This provides domain services close to the consumption. Multiple domain controllers ensure resilience and redundancy. |

For Omnissa Horizon 8 specifics, see Preparing Active Directory for details on supported versions and preparation steps. For Omnissa Horizon Cloud Service specifics, see Setting up Your Active Directory Domain.

Additionally, for Horizon usage, whether Horizon or Horizon Cloud, set up dedicated organizational units (OUs) for the machine accounts for virtual desktops and RDSH servers. Consider blocking inheritance on these OUs to stop any existing GPOs from having an undesired effect.

Group Policy

Group Policy objects (GPOs) can be used in a variety of ways to control and configure both Horizon Cloud Service on Microsoft Azure components and also standard Windows settings.

These policies are normally applied to the user or the computer Active Directory account, depending on where the objects are located in Active Directory. In a Horizon Cloud Service on Microsoft Azure environment, it is typical to set specific user policy settings for the specific Horizon Cloud Service session only when a user connects to it.

User settings often need to be applied based on the computer that a user signs in to, rather than on where the user account is located in Active Directory. Loopback processing is widely used for this purpose in any GPO that is applied to computer objects but also needs to configure user settings. This is particularly important with Dynamic Environment Manager. Dynamic Environment Manager applies only user settings, so if the Dynamic Environment Manager GPOs are applied to computer objects, loopback processing must be enabled.

Group policies can also be linked at the site level. Refer to the Microsoft website for details.

DNS

The Domain Name System (DNS) is widely used in a Workspace ONE and Horizon environment, from communication between server components to name resolution for clients and virtual desktops. Follow standard design principles for DNS, making it highly available. Additionally, ensure that:

- Forward and reverse lookup zones exist and resolve correctly.

- Dynamic updates are enabled so that desktops register with DNS correctly.

- Scavenging is enabled and tuned to cope with the rapid cloning and replacement of virtual desktops.
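One way to spot-check the first two items is to confirm that a desktop name resolves to an address and that the address resolves back to the same name. The following Python sketch shows the idea. It assumes fully qualified host names, and the `forward_reverse_consistent` helper is hypothetical. The resolver functions are parameters so that the check can be exercised without a live DNS server.

```python
import socket

def forward_reverse_consistent(
    hostname,
    forward=socket.gethostbyname,   # name -> IPv4 address
    reverse=socket.gethostbyaddr,   # address -> (name, aliases, addresses)
):
    """Return True if hostname -> address -> hostname yields the same name."""
    try:
        address = forward(hostname)
        reverse_name = reverse(address)[0]
    except OSError:
        # socket.gaierror and socket.herror are both subclasses of OSError.
        return False

    def normalize(name):
        return name.rstrip(".").lower()

    return normalize(reverse_name) == normalize(hostname)
```

A mismatch typically points to a stale PTR or A record left behind by a deleted clone, which is the condition that dynamic updates and scavenging are meant to clean up.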

Table 2: Implementation strategy for DNS

| Decision | DNS was provided by using Active Directory-integrated DNS zones. The DNS service ran on select Windows Servers running the domain controller roles. |

| Justification | The environment already had DNS servers with Active Directory-integrated DNS zones. |

DHCP

In a Horizon environment, desktops and RDSH servers rely on DHCP to get IP addressing information. DHCP must be allowed on the VM networks designated for these virtual desktops and RDSH servers.

In Horizon multi-site deployments, the number of desktops a given site is serving usually changes when a failover occurs. Typically, the recommendation is to over-allocate a DHCP range to allow for seamlessly rebuilding pools and avoiding scenarios where IPs are not being released from the DHCP scope for whatever reason.

For example, take a scenario where 500 desktops are deployed in a single subnet in each site. This would normally require, at a minimum, a /23 subnet range for normal production. To ensure additional capacity in a failover scenario, a larger subnet, such as /21, might be used. The /21 subnet range would provide approximately 2,000 IP addresses, meeting the requirements for running all 1,000 desktops in either site during a failover while still leaving enough capacity if reservations are not released in a timely manner.

It is not a requirement that the total number of desktops be run in the same site during a failover, but this scenario was chosen to show the most extreme case of supporting the total number of desktops in each site during a failover.
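The subnet arithmetic in this example can be checked with a few lines of Python. This is a minimal sketch: the function names and the `headroom` multiplier are assumptions introduced here, and the calculation subtracts only the network and broadcast addresses, so gateway and other reserved addresses reduce the usable count further.

```python
import ipaddress
import math

def usable_hosts(cidr: str) -> int:
    """Addresses in an IPv4 subnet, minus the network and broadcast addresses."""
    return ipaddress.ip_network(cidr).num_addresses - 2

def smallest_prefix_for(desktops: int, headroom: float = 1.0) -> int:
    """Longest IPv4 prefix whose usable host count covers desktops * headroom."""
    required = math.ceil(desktops * headroom)
    for prefix in range(30, 0, -1):  # walk from the smallest subnet to the largest
        if 2 ** (32 - prefix) - 2 >= required:
            return prefix
    raise ValueError("requirement does not fit in a single IPv4 subnet")

print(smallest_prefix_for(500))        # 23: normal production for one site
print(smallest_prefix_for(1000, 1.5))  # 21: both sites' desktops plus 50% headroom
print(usable_hosts("10.0.0.0/21"))     # 2046
```

With no headroom, 1,000 desktops would fit in a /22 (1,022 usable addresses), but that leaves almost no margin for leases that are not released promptly, which is why the example above chooses a /21.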

There are other strategies for addressing this issue. For example, you can use multiple /24 networks across multiple desktop pools or dedicate a subnet size you are comfortable using for a particular desktop pool. The most important consideration is that there be enough IP address leases available.

The environment can be quite fluid, with instant-clone desktops being deleted at logout and recreated when a pool dips below the minimum number. For this reason, make sure the DHCP lease period is set to a relatively short period. The amount of time depends on the frequency of logouts and the lifetime of a clone.

Table 3: Implementation strategy for DHCP

| Decision | DHCP was available on the VM network for desktops and RDSH servers. DHCP failover was implemented to ensure availability. The lease duration of the scopes used was set to 4 hours. |

| Justification | DHCP is required for Horizon environments. Virtual desktops can be short lived so a shorter lease period ensures that leases are released quicker. A lease period of 4 hours is based on an average logout after 8 hours. |
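To see why the lease period matters, consider a rough worst-case estimate in Python. The sketch assumes that a deleted clone never releases its lease and that each recreated clone requests a new one, so stale leases accumulate for one full lease period. The `peak_leases` function and the churn figure are illustrative assumptions, not measured values.

```python
import math

def peak_leases(active_desktops: int, recreations_per_hour: float, lease_hours: float) -> int:
    """Worst-case leases held: active desktops plus leases not yet expired."""
    stale = math.ceil(recreations_per_hour * lease_hours)
    return active_desktops + stale

# 1,000 desktops, each recreated about once per 8-hour session: 125 per hour.
print(peak_leases(1000, 125, 4))   # 1500, fits within a /21 scope
print(peak_leases(1000, 125, 24))  # 4000, exceeds a /21 scope
```

Under these assumptions, a 4-hour lease keeps the worst case inside the /21 range discussed earlier, while a 24-hour lease does not.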

Microsoft Azure has a built-in DHCP configuration that is a part of every VNet configured in Microsoft Azure. For more information, see IP Configurations in the Microsoft Azure documentation.

Distributed File System

File shares are critical in delivering a consistent user experience. They store various types of data used to configure or apply settings that contribute to a persistent-desktop experience.

The data can include the following types:

- IT configuration data, as specified in Dynamic Environment Manager

- User settings and configuration data, which are collected by Dynamic Environment Manager

- Windows mandatory profile

- User data (documents, and more)

- ThinApp packages

The design requirement is to have no single point of failure within a site while replicating the above data types between the two data centers to ensure their availability in a site-failure scenario. This reference architecture uses Microsoft Distributed File System Namespace (DFS-N) with array-level replication.

Table 4: Implementation Strategy for Replicating Data to Multiple Sites

| Decision | DFS-Replication (DFS-R) was used to replicate user data from server to server and optionally between sites. |

| Justification | Replication between servers provides local redundancy. Replication between sites provides site redundancy. |

DFS namespace

The namespace is the referred entry point to the distributed file system.

- A single entry point is enabled and active for profile-related shares to comply with the Microsoft support statements (for example, Dynamic Environment Manager user settings).

- Other entry points can be defined but turned off to stop user referrals to them. They can then be made active in a recovery scenario.

- Multiple active entry points are possible for shares that contain data that is read-only for end users (for example, Dynamic Environment Manager IT configuration data, Windows mandatory profile, ThinApp packages).
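The referral rules above can be expressed as a simple check. The following Python sketch models referral targets as plain data and flags layouts that break the rules. The `ReferralTarget` class, the `check_referrals` function, and the UNC paths are hypothetical and do not correspond to any DFS management API.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ReferralTarget:
    path: str      # hypothetical UNC path of a folder target
    enabled: bool  # whether clients are referred to this target

def check_referrals(read_only_for_users: bool, targets: List[ReferralTarget]) -> List[str]:
    """Return a list of problems with the referral layout (empty if none)."""
    active = [target for target in targets if target.enabled]
    problems = []
    if not active:
        problems.append("no active referral target")
    if not read_only_for_users and len(active) > 1:
        problems.append("writable share has more than one active target")
    return problems

# A profile share: one active target, one kept disabled for recovery.
profile_targets = [
    ReferralTarget(r"\\fs-site1\profiles", enabled=True),
    ReferralTarget(r"\\fs-site2\profiles", enabled=False),
]
print(check_referrals(read_only_for_users=False, targets=profile_targets))  # []
```

In a recovery scenario, the disabled target would be enabled and the failed one disabled, which keeps the writable share at a single active entry point.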

Table 5: Implementation strategy for managing entry points to the file system

| Decision | DFS-Namespace (DFS-N) was used. Depending on the data type and user access required, either one or multiple referral entry points may be enabled. |

| Justification | DFS-N provides a common namespace to the multiple referral points of the user data that is replicated by DFS-R. |

More detail on how DFS design applies to profile data can be found in Dynamic Environment Manager Architecture.

Certificate Authority

A Microsoft Enterprise Certificate Authority (CA) is often used for certificate-based authentication, SSO, and email protection. A certificate template is created within the Microsoft CA and is used by Workspace ONE® UEM to sign certificate-signing requests (CSRs) that are issued to mobile devices through the Certificate Authority integration capabilities in Workspace ONE UEM and Active Directory Certificate Services.

The Microsoft CA can be used to create CSRs for Unified Access Gateway, Workspace ONE Access, and any other externally facing components. The CSR is then signed by a well-known external CA to ensure that any device connecting to the environment has access to a valid root certificate.

Having a Microsoft Enterprise CA is a prerequisite for Horizon True SSO. A certificate template is created within the Microsoft CA and is used by True SSO to sign CSRs that are generated by the VM. These certificates are short-lived (approximately 1 hour) and are used solely to sign a user in to a desktop through Workspace ONE Access with single sign-on, without prompting for AD credentials.

Details on setting up a Microsoft CA can be found in the Setting Up True SSO section in Horizon 8 Configuration.

Table 6: Implementation strategy for the Certificate Authority Server

| Decision | A Microsoft Enterprise CA was set up. |

| Justification | This can be used to support certificate authentication for Windows 10 devices and to support the Horizon True SSO capability. |

Microsoft RDS licensing

Applications published with Horizon use Microsoft RDSH servers as a shared server platform to host Windows applications. Microsoft RDSH servers require licensing through a Remote Desktop Licensing service. It is critical to ensure that the Remote Desktop Licensing service is highly available within each site and also redundant across sites.

Table 7: Implementation strategy for Microsoft RDS licensing

| Decision | Multiple RDS Licensing servers were configured. At least one was configured per site. |

| Justification | This provides licensing for Microsoft RDSH servers where used for delivering Horizon published applications. |

Microsoft Key Management Service

To activate Windows (and Microsoft Office) licenses in a VDI environment, it is recommended to use Microsoft Key Management Service (KMS) with volume license keys. Because desktops are typically deleted at logout and are recreated frequently, it is important that this service be highly available. See the Microsoft documentation on how best to deploy volume activation. It is critical to ensure that the KMS service is highly available within each site and also redundant across sites.

Table 8: Implementation strategy for the KMS service

| Decision | Microsoft KMS was deployed in a highly available manner. |

| Justification | This allows Horizon virtual desktops and RDSH servers to activate their Microsoft licenses. |

Load balancing

To remove a single point of failure from server components, we can deploy more than one instance of the component and use a load balancer. This not only provides redundancy but also allows the load and processing to be spread across multiple instances of the component. To ensure that the load balancer itself does not become a point of failure, most load balancers allow for setup of multiple nodes in an HA or active/passive configuration.
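As a conceptual illustration only, the following Python sketch shows round-robin distribution across instances with unhealthy instances skipped. It is not how any particular load balancer product is implemented, and the class, method, and instance names are hypothetical.

```python
from itertools import cycle

class RoundRobinBalancer:
    """Hand out instances in turn, skipping any that are marked unhealthy."""

    def __init__(self, instances):
        self._instances = list(instances)
        self._healthy = set(self._instances)
        self._ring = cycle(self._instances)

    def mark(self, instance, healthy):
        """Record the result of a health check for one instance."""
        if healthy:
            self._healthy.add(instance)
        else:
            self._healthy.discard(instance)

    def next_instance(self):
        """Return the next healthy instance, trying each instance at most once."""
        for _ in range(len(self._instances)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy instances available")

balancer = RoundRobinBalancer(["cs-1", "cs-2", "cs-3"])
balancer.mark("cs-2", healthy=False)  # simulate a failed health check
print([balancer.next_instance() for _ in range(4)])  # ['cs-1', 'cs-3', 'cs-1', 'cs-3']
```

When one instance fails its health check, requests continue to flow to the remaining instances, which is the redundancy benefit described above.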

VMware NSX

NSX provides network-based services such as security, virtualization networking, routing, and switching in a single platform. These capabilities are delivered for the applications within a data center, regardless of the underlying physical network and without the need to modify the application.

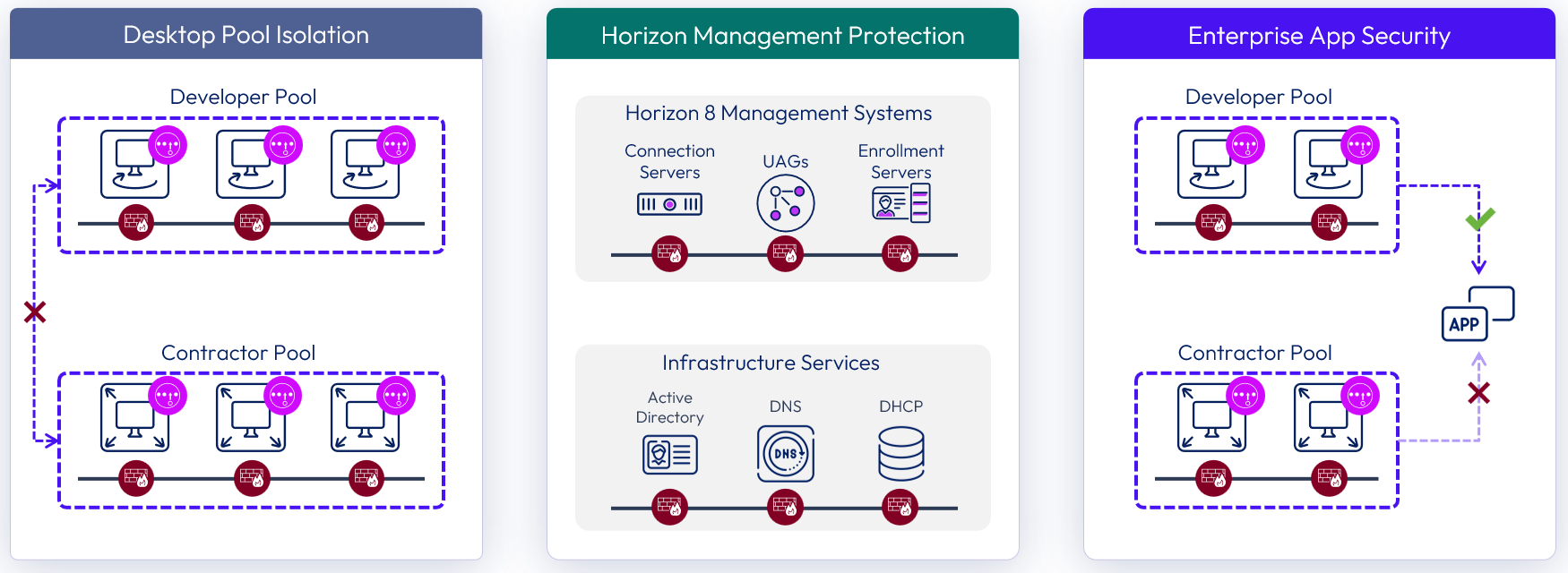

As Figure 1 shows, NSX performs several security functions within an Omnissa Horizon 8 deployment:

- Protects desktop pool VM communication – NSX can apply firewall policy to block or limit desktop-to-desktop communication or desktop-to-enterprise-application communication. Related capabilities include data security scanning (PII/PHI/PCI) and third-party security services such as agentless antivirus, NGFW, and IPS.

- Protects EUC management infrastructure – NSX secures inter-component communication among the management components of a Horizon infrastructure.

- Protects users and enterprise applications – NSX allows user-level identity-based micro-segmentation for the Horizon desktops and RDS Hosts used for published applications. This enables fine-grained access control and visibility for each desktop or published application based on the individual user.

- Virtual desktops contain applications that allow users to connect to various enterprise applications inside the data center. NSX secures and limits access to applications inside the data center from each desktop.

Figure 1: NSX security use cases for Omnissa Horizon 8

Micro-segmentation

The concept of micro-segmentation takes network segmentation, typically done with physical devices such as routers, switches, and firewalls at the data center level, and applies the same services at the individual workload (or desktop) level, independent of network topology.

NSX and its Distributed Firewall feature are used to provide a network-least-privilege security model using micro-segmentation for traffic between workloads within the data center. NSX provides firewalling services, within the hypervisor kernel, where every virtual workload gets a stateful firewall at the virtual network card of the workload. This firewall provides the ability to apply extremely granular security policies to isolate and segment workloads regardless of and without changes to the underlying physical network infrastructure.

Two foundational security needs must be met to provide a network-least-privilege security posture with NSX micro-segmentation. NSX uses a Distributed Firewall (DFW) and can use network virtualization to deliver the following requirements.

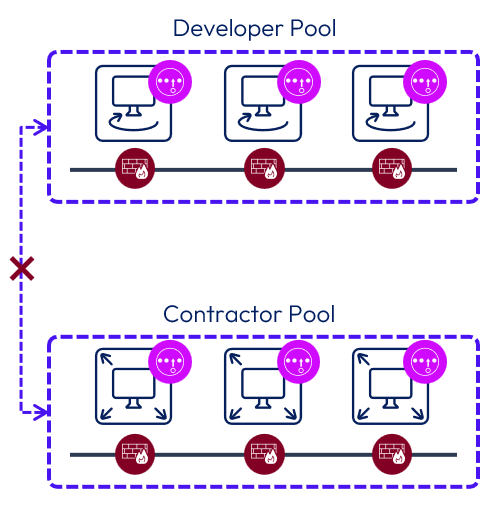

Isolation

Isolation can be applied for compliance, software life-cycle management, or general containment of workloads. In a virtualized environment, NSX can provide isolation by using the DFW to limit which workloads can communicate with each other. In the case of Horizon, NSX can block desktop-to-desktop communications, which are not typically recommended, with one simple firewall rule, regardless of the underlying physical network topology.

Figure 2: Isolation Between Desktops
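The logic of a single desktop-to-desktop block rule can be sketched in Python as a first-match rule table. This models the concept only and is not the NSX API or rule syntax. The group names, the `Rule` class, the permissive second rule, and the default action are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    source: str       # hypothetical security group name, or "any"
    destination: str  # hypothetical security group name, or "any"
    action: str       # "allow" or "drop"

RULES = [
    # The one isolation rule: desktops may not talk to other desktops.
    Rule("horizon-desktops", "horizon-desktops", "drop"),
    # Illustrative only; a real policy would list specific destinations.
    Rule("horizon-desktops", "any", "allow"),
]

def evaluate(src_groups, dst_groups, rules=RULES, default="drop"):
    """Return the action of the first rule that matches both endpoints."""
    for rule in rules:
        src_ok = rule.source == "any" or rule.source in src_groups
        dst_ok = rule.destination == "any" or rule.destination in dst_groups
        if src_ok and dst_ok:
            return rule.action
    return default

print(evaluate({"horizon-desktops"}, {"horizon-desktops"}))  # drop
print(evaluate({"horizon-desktops"}, {"file-servers"}))      # allow
```

Because the rule matches on group membership rather than on IP addresses or subnets, it applies to every desktop in the group regardless of where the desktop sits in the physical network.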

Another isolation scenario, not configured in this reference architecture, entails isolating the Horizon desktop and application pools. Collections of VDI and RDSH machines that are members of specific Horizon pools and NSX security groups can be isolated at the pool level rather than per machine. Also, identity-based firewalling can be incorporated into the configuration, which builds on the isolation setup by applying firewall rules that depend on users’ Active Directory group membership, for example.

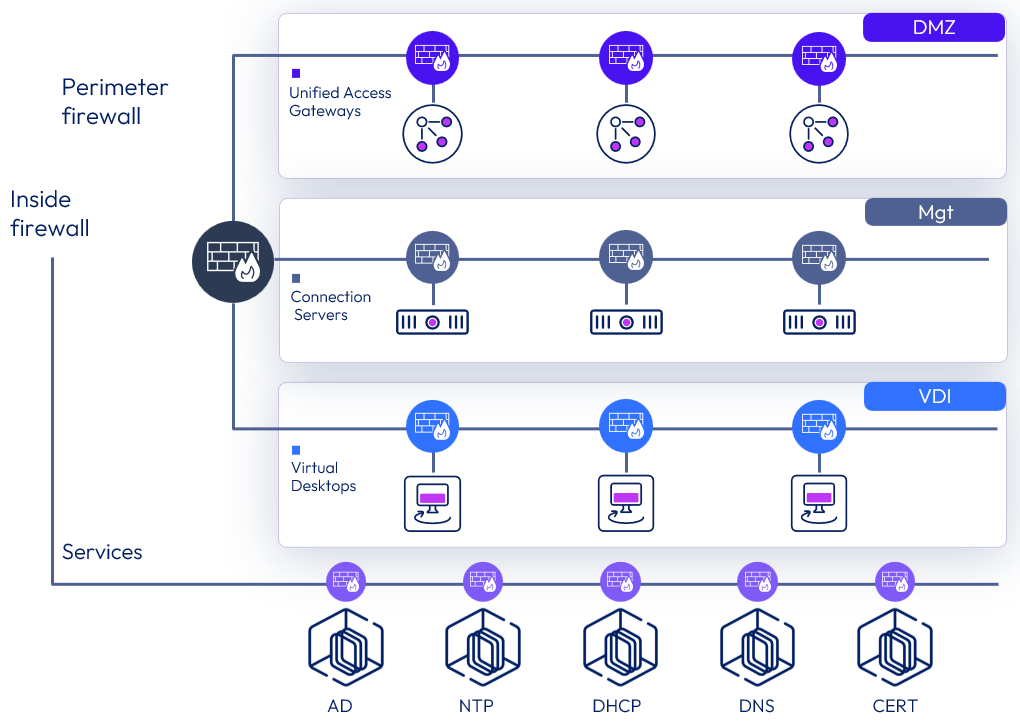

Segmentation

Segmentation can be applied at the VM, application, infrastructure, or network level with NSX. Segmentation is accomplished either by segmenting the environment into logical tiers, each on its own separate subnet using virtual networking, or by keeping the infrastructure components on the same subnet and using the NSX DFW to provide segmentation between them. When NSX micro-segmentation is coupled with Horizon, NSX can provide logical trust boundaries around the Horizon infrastructure as well as provide segmentation for the infrastructure components, and for the desktop pools.

Figure 3: NSX Segmentation

Advanced services insertion (optional)

Although we did not use this option for this reference architecture, it warrants some discussion. As one of its key functionalities, NSX provides stateful DFW services at layers 2–4 for micro-segmentation. Customers who require higher-level inspection for their applications can leverage one or more NSX extensible network frameworks, in this case NSX in conjunction with third-party next-generation firewall (NGFW) vendors for integration and traffic redirection. Specific traffic types can be sent to the NGFW vendor for deeper inspection or other services.

The other network extensibility framework for NSX is Endpoint Security (EPSec). NSX uses EPSec to provide enhanced agentless antivirus, anti-malware, and endpoint-monitoring functions from third-party security vendors. This integration is optional. In Horizon deployments, it is beneficial because it removes the need for antivirus or anti-malware (AV/AM) agents on the guest operating system. This functionality is provided by NSX through guest introspection, which is also leveraged to provide information for the identity firewall.

To implement guest introspection, a guest introspection VM must be deployed on each ESXi host in the desktop cluster. Also, the VMware Tools driver for guest introspection must be installed on each desktop or RDSH VM.

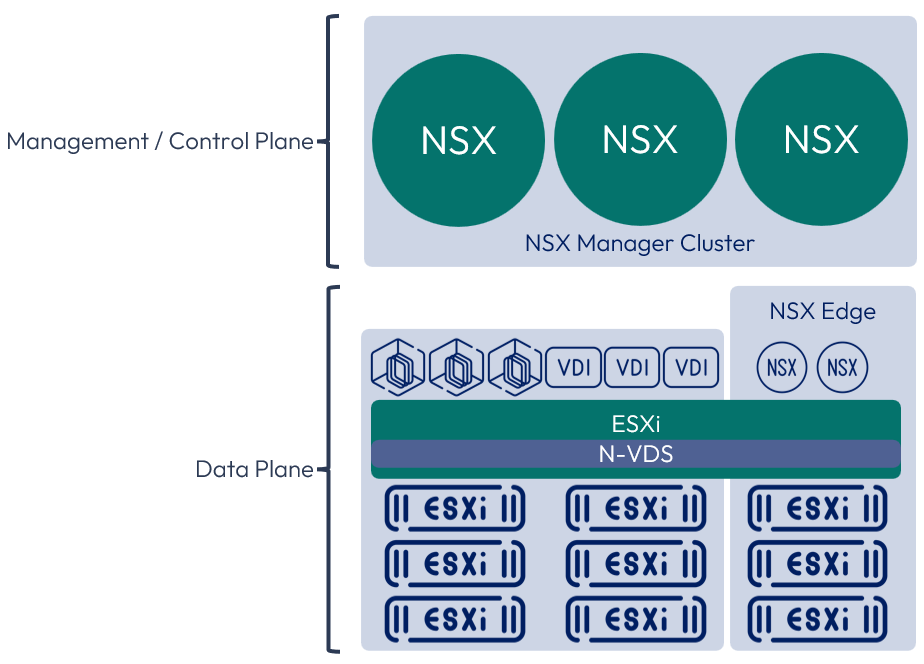

NSX components

An NSX architecture consists of the following components. This section is not meant to be an exhaustive guide to NSX and every component. For a more in-depth look at the NSX components and design decisions, see the VMware NSX Documentation.

Table 9: NSX Components

| Component | Description |

| NSX Manager cluster | The NSX Manager Cluster is deployed as a virtual appliance in a set of three appliances for redundancy and scalability. The NSX Manager Cluster represents the Management and Control Plane of the NSX deployment. The NSX Manager Cluster operates a separate user-interface for administration. |

| Transport node | A Transport Node is a device that is prepared with NSX and participates in traffic forwarding. Edge Transport Node: The NSX Edge Node can be deployed in two form factors, as a virtual appliance in various sizes (Small, Medium, and Large) or on bare metal. The NSX Edge Nodes provide North/South connectivity as well as centralized services such as NAT, Load Balancing, Gateway Firewall and Routing, DHCP, and VPN capabilities. NSX Edge Nodes provide pools of capacity for running these services. Hypervisor Transport Node: Hypervisor transport nodes are hypervisors prepared and configured to run NSX. The N-VDS provides network services to the virtual machines running on those hypervisors. NSX currently supports ESXi and KVM hypervisors and also works with bare-metal workloads. |

| NSX virtual distributed switch (N-VDS) | The N-VDS is a software switch based on the vSphere vSwitch that provides switching functionality for NSX. It is installed in the hypervisor (vSphere, in the case of Horizon) and managed by NSX. The N-VDS provides the capability for building networks using overlay encapsulation/decapsulation. The N-VDS is similar in concept to the Virtual Distributed Switch that vCenter can build for vSphere hypervisors, but it is fully managed by NSX and has no dependency on vCenter or vSphere. |

| Transport zone | An NSX Transport Zone represents the span of an NSX Segment and can be either an Overlay or VLAN type. It is associated with an N-VDS which binds it to a Transport Node. |

| NSX segment | The NSX Segment is a virtual Layer 2 broadcast domain. Segments are created and attached to a Transport Zone. The span of a Segment is defined by the Transport Zone. An NSX Segment can be of type Overlay or VLAN and inherits the type from the Transport Zone to which it is attached. An NSX Overlay Segment represents a software construct of a layer 2 broadcast domain. It can be associated with a network subnet, and its default gateway is typically the tier-0 or tier-1 logical NSX router. A VLAN Segment represents a software extension of a physical layer 2 broadcast domain into the virtualized environment. The subnet ID of the underlying physical network is associated with the VLAN Segment, and the default gateway is typically the physical router that already provides the gateway in the underlay network. |

| NSX kernel modules | The NSX kernel modules are installed on each hypervisor that NSX is managing and build a distributed routing and firewalling model that scales out as a customer adds more hosts to their environment. Deploying NSX to the hypervisors installs the software packages that enable in-kernel routing and firewalling for local workloads. NSX manages these local firewalls and routing instances through the NSX Simplified UI. |

NSX is divided into three basic planes: Management, Control, and Data. Each plane is independent of the others, so loss of function in one plane does not equate to loss of function in another. The figure below shows the NSX logical architecture and the breakdown of the components in each plane.

Figure 4: NSX logical architecture

NSX scalability

See the VMware NSX Configuration Limits. With regard to an Omnissa Horizon 8 environment, the two most relevant sections are the Distributed Firewall and the Identity Firewall sections.

Design overview

NSX and Omnissa Horizon 8 deployments can accommodate a wide range of deployment topologies and options. NSX needs to align with Horizon at logical boundaries so that the two scale similarly. Not every customer requires a topology that scales to the Horizon Cloud Pod Architecture limits, but guidance is helpful for showcasing the options. The following sections describe three Horizon and NSX topologies that align NSX at the Horizon pod boundary and provide guidance for customers that fall into each sizing category. These topologies are not meant to be comprehensive or to replace a services-led engagement recommendation. Instead, they provide guidance on what would be required to build each scenario based on each platform’s provided maximums.

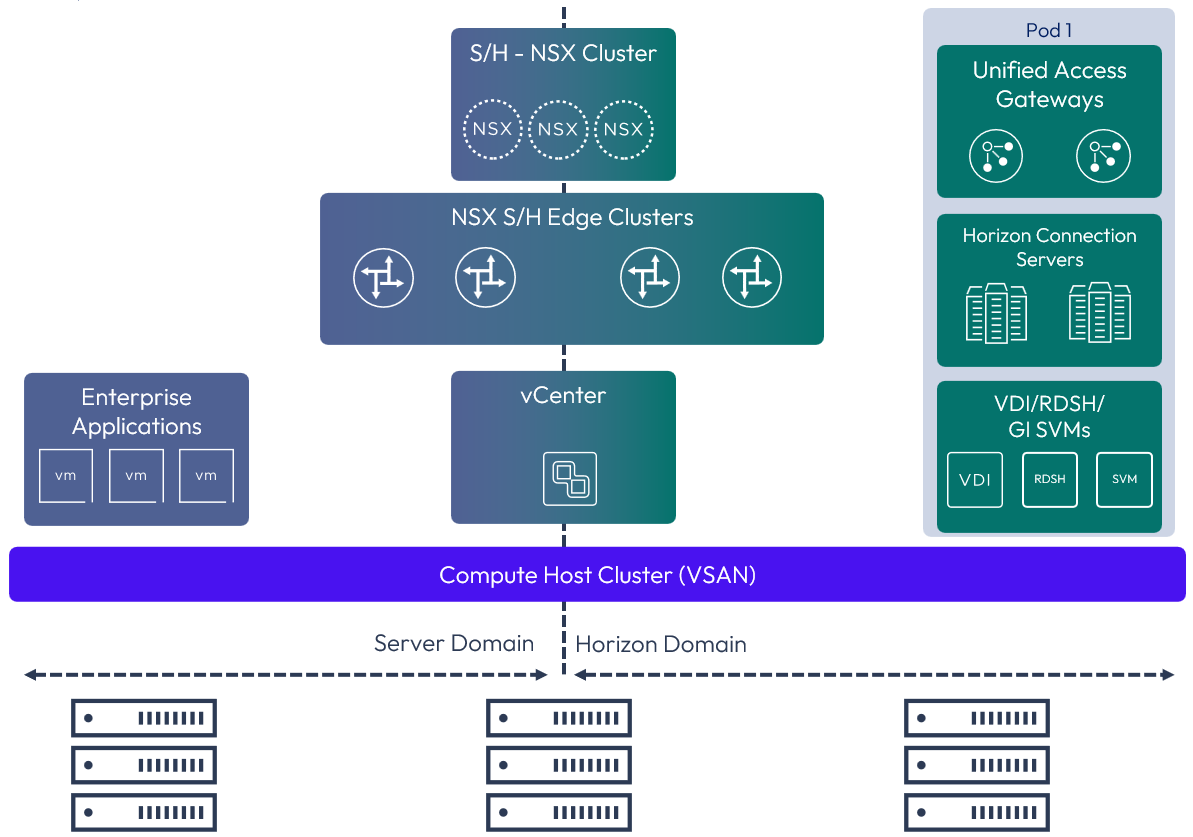

Small (converged cluster) topology

The Small topology represents a converged cluster design where the Management, Edge, and Compute Clusters of both the Server and Horizon domains reside on the same logical vSphere compute cluster. This topology would be similar to using hyper-converged hardware to run Horizon, Enterprise Servers, and the Management components where the compute infrastructure consists of a single vSphere cluster. This Horizon and NSX topology aligns from an NSX design perspective with the Collapsed Management and Edge Cluster design.

Figure 5: Example small Omnissa Horizon 8 and NSX topology

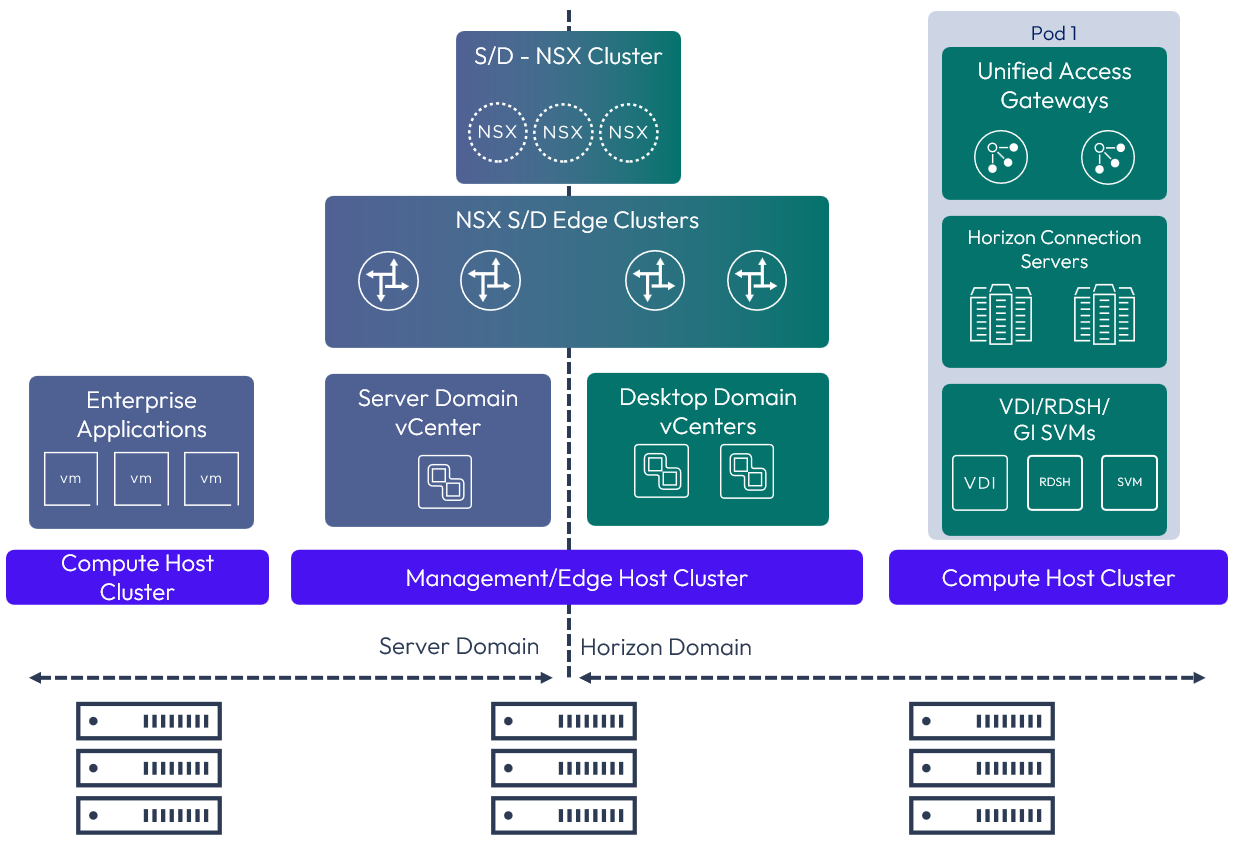

Medium topology

The Medium topology represents the physical splitting of the management, edge, and compute clusters. From an NSX design perspective, this Horizon and NSX topology aligns with the collapsed management and edge cluster design, in which the management and edge clusters are combined into one cluster to minimize the amount of hardware required for deployment.

Figure 6: Medium Omnissa Horizon 8 and NSX topology

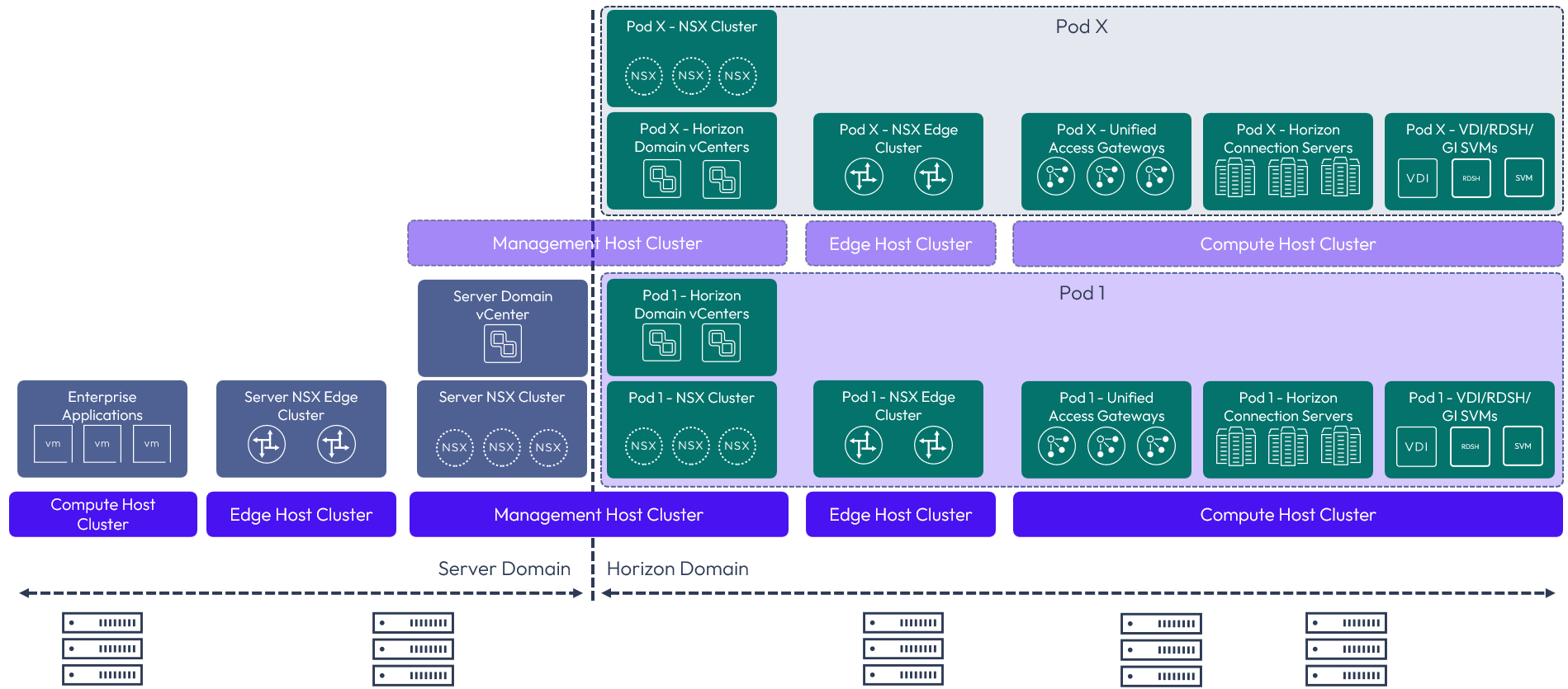

Large topology

The large topology represents a scalable Horizon and NSX design for large deployments, with the ability to support multiple Horizon pods. From an NSX design perspective, this topology aligns with the enterprise vSphere-based design. All clusters are split out completely and separately from each other to isolate fault domains for each aspect of the design.

The management clusters for multiple pods could be collapsed and share the management cluster for domains to reduce the overall hardware needed and reduce some complexity of the design. As the scale of an environment increases with more pods, or to spread the fault domain, this could also be split out into separate management clusters.

While this design does require more hardware to accomplish, it provides maximum and independent scalability for large Horizon deployment needs.

Figure 7: Large Omnissa Horizon 8 and NSX topology

Table 10: Design strategy for NSX

| Decision | The medium topology was used. |

| Justification | Each location was sized for an 8,000-user Horizon environment in a single Horizon pod. Separate vSphere clusters are already in place for the management components and for the Horizon desktops. The medium topology can easily be scaled to a large topology if the size of the environment increases dramatically. |

Microsoft Azure environment infrastructure design

In this reference architecture, multiple Azure regional data centers were used to demonstrate multi-site deployments of Horizon Cloud Service on Microsoft Azure. We configured infrastructures in two Microsoft Azure regions (East US, East US 2) to facilitate this example.

Each region was configured with the following components.

Table 11: Microsoft Azure infrastructure components

| Component | Description |

| Management VNet | Microsoft Azure Virtual Network (VNet) configured to host shared services for use by the Horizon Cloud deployments. |

| VNet peer (management to pod) | Unidirectional network connection between two VNets in Microsoft Azure. Both the Allow Forwarded Traffic and the Allow Gateway Transit options were selected in the VNet configuration to ensure proper connectivity between the two VNets. |

| VNet peer (pod to management) | Unidirectional network connection between two VNets in Microsoft Azure. Both the Allow Forwarded Traffic and the Allow Gateway Transit options were selected in the VNet configuration to ensure proper connectivity between the two VNets. |

| Microsoft Azure VPN Gateway | VPN gateway resource provided by Microsoft Azure to provide point-to-point private network connectivity to another network. |

| Two Microsoft Windows Server VMs | Two Windows servers provide redundancy in each Microsoft Azure region for common network services. |

| Active Directory domain controller | Active Directory was implemented as a service on each Windows server. |

| DNS server | DNS was implemented as a service on each Windows server. |

| Windows DFS file share | A Windows share with DFS was enabled on each Windows server to contain the Dynamic Environment Manager profile and configuration shares. |

| Horizon Cloud control pod VNet | Microsoft Azure VNet created for use of the Horizon Cloud pod. This VNet contains all infrastructure and user services components (RDSH servers, VDI desktops) provided by the Horizon Cloud pod. |

We also used two separate Microsoft Azure subscriptions to demonstrate that multiple pods could be deployed to different subscriptions and managed from the same Horizon Cloud Service on Microsoft Azure control plane. For more detail on the design decisions that were made for Horizon Cloud pod deployments, see Horizon Cloud Service - Architecture.

Network connectivity to Microsoft Azure

You do not need to provide private access to Microsoft Azure as a part of your Horizon Cloud on Microsoft Azure deployments. The Microsoft Azure infrastructure can be accessed from the Internet.

There are several methods for providing private access to infrastructure deployed to any given Microsoft Azure subscription in any given Microsoft Azure region, including VPN and ExpressRoute configurations.

Table 12: Implementation strategy for providing private access to Horizon Cloud Service

| Decision | VPN connections were leveraged. Connections were made from the on-premises data center to each of the two Microsoft Azure regions used for this design. |

| Justification | This is the most typical configuration that we have seen in customer environments to date. See Connecting your on-premises network to Azure for more details on the options available to provide a private network connection to Microsoft Azure. |

Microsoft Azure Virtual Network (VNet)

In a Horizon Cloud Service on Microsoft Azure deployment, you are required to configure virtual networks (VNets) for use by the Horizon Cloud pod. You must have already created the VNet you want to use in that region in your Microsoft Azure subscription before deploying Horizon Cloud Service.

Note that DHCP is a service that is a part of a VNet configuration. For more information on how to properly configure a VNet for Horizon Cloud Service, see Configure Network Settings for Microsoft Azure Regions. Another useful resource is the Requirements Checklist for Deploying a Microsoft Azure Edge.

Summary and additional resources

Now that you have come to the end of this integration chapter, you can return to the reference architecture landing page and use the tabs, search, or scroll to select further chapters in one of the following sections:

- Overview chapters provide understanding of business drivers, use cases, and service definitions.

- Architecture chapters give design guidance on the Omnissa products you are interested in including in your deployment, including Workspace ONE UEM, Access, Intelligence, Workspace ONE Assist, Horizon Cloud Service, Horizon 8, App Volumes, Dynamic Environment Manager, and Unified Access Gateway.

- Integration chapters cover the integration of products, components, and services you need to create the environment capable of delivering the services that you want to deliver to your users.

- Configuration chapters provide reference for specific tasks as you deploy your environment, such as installation, deployment, and configuration processes for Omnissa Workspace ONE, Horizon Cloud Service, Horizon 8, App Volumes, Dynamic Environment Manager, and more.

Changelog

The following updates were made to this guide:

| Date | Description of Changes |

| 2025-04-01 | |

| 2024-10-07 | |

| 2024-05-29 | |

| 2023-07-24 | |

Author and contributors

This chapter was written by:

- Graeme Gordon, Senior Staff Architect, Omnissa.

Feedback

Your feedback is valuable. To comment on this paper, either use the feedback button or contact us at tech_content_feedback@omnissa.com.